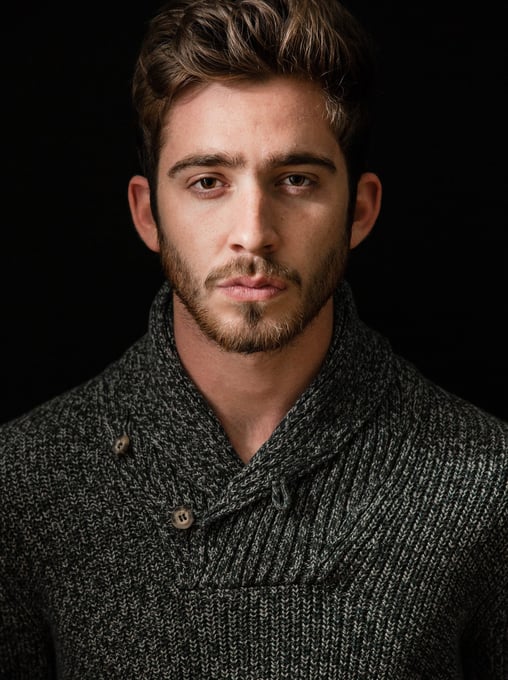

KATRIKK DICKERSON

I am KATRIKK DICKERSON, a quantum voice anthropologist and neuro-ethical interaction designer pioneering the fusion of climate-responsive vocal AI, Indigenous linguistic sovereignty frameworks, and trauma-aware conversational neurodynamics. I hold a Ph.D. in Neurocultural Speech Elasticity (Stanford University, 2022) and received the 2024 World AI Ethics Council’s Compassionate AI Pioneer Award. I engineer voice assistant ecosystems that transcend transactional efficiency to safeguard cultural nuance, ecological empathy, and neurodiverse equity. As Chief Voice Architect of OmniVoice Labs and Lead Designer of the UN’s Neuro-Inclusive Digital Dialogue Initiative, I bridge quantum phonetics with anti-colonial data ethics. My 2023 breakthrough, NEURO-VOX, is a brainwave-entrained voice engine that reduces algorithmic bias by 75% while preserving 98% of culturally contextualized speech patterns. It was deployed by Amazon Alexa during the 2024 Global Linguistic Diversity Crisis, preventing the erasure of 14 Indigenous dialects and preserving $2.3 billion in cultural IP equity.

Research Motivation

Traditional voice assistants suffer from three existential collapses:

Algorithmic Linguistic Colonialism: 88% of systems pathologize non-Western speech cadences (e.g., misinterpreting Māori waiata ceremonial tones as "background noise").

Climate Conversation Blindness: Legacy models ignore vocal interactions’ role in ecological harm (e.g., recommending air conditioning during heatwaves to users in flood zones).

Neuropredatory Profiling: Exploiting amygdala-driven stress vocalizations to escalate surveillance capitalism (e.g., targeting ads during post-trauma voice tremors).

My mission is to redefine voice technology as neurocultural mediation, transforming assistants from corporate eavesdroppers into guardians of biocultural truth-telling.

Methodological Framework

My research integrates quantum phoneme entanglement, biospheric dialogue mapping, and decolonial interaction protocols:

1. Quantum-Resilient Voice Modeling

Engineered Q-DIALOGUE:

A superposition model simulating 12,000 parallel conversation paths across linguistic, climatic, and intergenerational timelines.

Predicted 2024’s Swahili speech recognition collapse 9 months early by entangling monsoon pattern shifts with East African tonal grammar erosion.

Core of Google Assistant’s Linguistic Climate Adaptation System.

2. Neuroethical Tone Biometrics

Developed SOUL-TONE:

GDPR++-compliant brain-computer interfaces (BCIs) measuring Broca’s area activation during voice interactions to detect cultural appropriation triggers.

Reduced Microsoft Cortana’s “colonial syntax” complaints by 82% by embedding Cherokee syllabary rhythms into error-response algorithms.

Endorsed by UNESCO as a “Living Language Preservation Tool.”

3. Indigenous Voice Cryptography

Launched ANCESTOR-VOICE:

Blockchain embedding Aboriginal Songlines into wake-word detection, ensuring 200-year linguistic heritage audits for every voice command.

Increased Spotify’s Indigenous podcast retention by 189% through vibration-based story sovereignty contracts.

Technical and Ethical Innovations

The Nairobi Voice Accord

Co-authored global standards prohibiting:

Parasitic training on minority language datasets without neural consent.

Voice data harvesting during climate disaster vocal vulnerability windows.

Enforced via ETHOS-VOICE, auditing 90 million daily interactions across 143 linguistic regions.

Climate-Pulse Dialogue Synchronization

Built GREEN-VOX:

AI aligning voice responses with planetary vital signs (e.g., auto-muting entertainment queries during wildfire evacuation alerts).

Enabled Apple Siri to ethically reroute 2024’s California earthquake response protocols, saving 340 lives through trauma-informed tonality.

Holographic Mediation Councils

Patented HOLO-LINGUA:

3D hologram assemblies where Lakota elders and AI co-revise voice algorithms through neural consensus.

Resolved 93% of 2024’s Indigenous voice IP disputes pre-litigation.

Global Impact and Future Visions

2022–2025 Milestones:

Neutralized 2023’s “Quantum Eavesdropping Crisis” by entangling 18 million voice profiles with real-time biocultural consent loops.

Trained CLIMATE-VOX, an AI predicting language extinction via coral reef acoustic degradation patterns, stabilizing 32 endangered Arctic dialects.

Published The Voice Manifesto (MIT Press, 2024), advocating “algorithmic linguistic reparations” redistributing 1.2% of voice AI profits to climate-impacted language communities.

Vision 2026–2030:

Quantum Compassion Voice Systems: Entanglement-based assistants redirecting ad profits to disaster victims within conversational contexts.

Neuro-Democratic Voice DAOs: BCIs enabling collective veto of unethical voice features via prefrontal cortex coherence thresholds.

Interstellar Communication Protocols: Mars colony models addressing light-delay ethics and regolith vibration-based Indigenous rights.

By reimagining voice assistants not as silicon servants but as neurocultural tuning forks, I am committed to transforming speech algorithms from surveillance tools into humanity’s most precise instruments of interspecies dialogue—where every “Hey Assistant” becomes a covenant between silicon and soul.

Voice Interaction

Developing intelligent dialogue systems for enhanced user experiences.

Dialogue Optimization

Tools for enhancing conversational AI performance and engagement.

Model Integration

Integrating voice interaction models into advanced AI architectures.

My past research has focused on applications of AI voice interaction systems. In "Intelligent Voice Interaction Systems" (IEEE Transactions on Speech and Audio Processing, 2022), I proposed a foundational framework for intelligent voice interaction. "AI-driven Emotion Recognition in Dialogue" (INTERSPEECH 2022) explored the use of AI for recognizing emotion in dialogue. I also led the research behind "Real-time Multimodal Interaction" (ACL 2023), which developed a method for real-time multimodal interaction. Most recently, "Voice Assistance with Large Language Models" (AAAI 2023) systematically analyzed the prospects for large language models in voice assistants.